JEP-380: Unix domain socket channels

Michael McMahon on February 3, 2021

The SocketChannel and ServerSocketChannel API provides blocking and multiplexed non-blocking access to TCP/IP sockets. In Java 16, these classes have been extended to support Unix domain (AF_UNIX) sockets for inter-process communication (IPC) within the same system. This post describes how to use the feature and illustrates some other use cases, such as communication between processes in different Docker containers on the same system.

What are Unix domain sockets

TCP/IP sockets are addressed by IP address and port number and are used for network communication, whether on the internet or on private networks. Unix domain sockets, on the other hand, are only for inter-process communication on the same physical host. They have been a feature of Unix operating systems for decades but were only recently added to Microsoft Windows, so they are no longer confined to Unix. Unix domain sockets are addressed by filesystem pathnames that look much the same as other filenames, e.g. /foo/bar or C:\foo\bar.

Why use them?

There are a number of benefits to using Unix domain sockets for local IPC.

- Performance

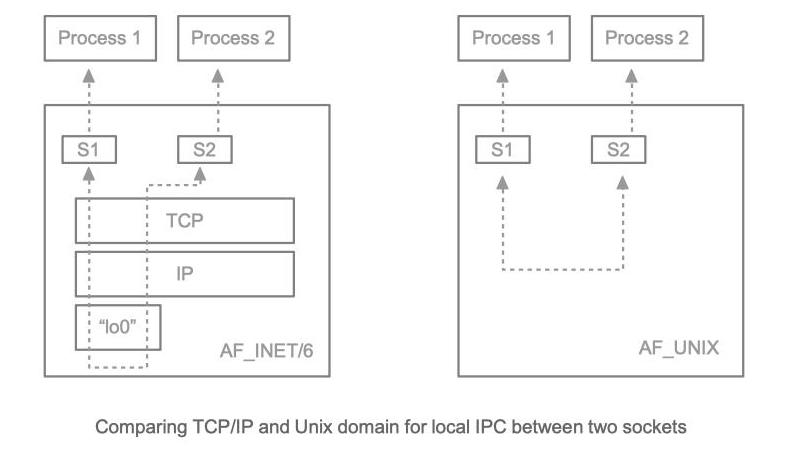

Using Unix domain sockets instead of loopback TCP/IP (connections to 127.0.0.1 or ::1) bypasses the TCP/IP stack with consequential improvements in latency and CPU usage.

- Security

First, when local access to a service is required but remote network access is not, publishing the service as a local pathname avoids the possibility of the service being accessed unexpectedly by remote clients.

Second, because Unix domain sockets are addressed by filesystem objects, standard Unix (and Windows) filesystem access controls can be applied to limit access to a service to specific users or groups, as required.
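As a rough sketch of the second point, assuming a POSIX filesystem, a server could restrict its socket file to the owning user right after binding. The class name RestrictedSocket, the method bindOwnerOnly and the file name svc.sock are hypothetical and chosen only for illustration; on Windows, where POSIX permissions are not available, ACLs would be needed instead.

```java
import java.io.IOException;
import java.net.StandardProtocolFamily;
import java.net.UnixDomainSocketAddress;
import java.nio.channels.ServerSocketChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.PosixFilePermission;
import java.nio.file.attribute.PosixFilePermissions;
import java.util.Set;

class RestrictedSocket {

    // Binds a Unix domain server at socketPath, limits access to the owner,
    // and returns the permissions read back from the socket file.
    static Set<PosixFilePermission> bindOwnerOnly(Path socketPath) throws IOException {
        try (var server = ServerSocketChannel.open(StandardProtocolFamily.UNIX)) {
            server.bind(UnixDomainSocketAddress.of(socketPath));
            // Assumption: the filesystem supports POSIX permissions.
            Files.setPosixFilePermissions(socketPath,
                    PosixFilePermissions.fromString("rw-------"));
            return Files.getPosixFilePermissions(socketPath);
        } finally {
            // Clean up the socket file once the server is closed.
            Files.deleteIfExists(socketPath);
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("uds");
        try {
            var perms = bindOwnerOnly(dir.resolve("svc.sock"));
            System.out.println(PosixFilePermissions.toString(perms));
        } finally {
            Files.deleteIfExists(dir);
        }
    }
}
```

Because there is a short window between bind and the permission change, creating the socket inside a directory that is already restricted is a common alternative.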

- Convenience

In certain environments like Docker containers, it can be troublesome to set up communication links between processes in different containers using TCP/IP sockets. An example below describes how to communicate via a Unix domain socket in a shared volume between two Docker containers.

- Compatibility

As will be seen below, once the obvious addressing differences between TCP/IP and Unix domain sockets are considered, they can be expected to behave compatibly. In particular, once a channel has been created and bound to an address, existing code that is agnostic to addressing should continue to work unmodified whether it is given a Unix domain or a TCP/IP socket. So, Selectors for example, can handle either type of socket without any code change.
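To illustrate the compatibility point, the following sketch registers one TCP/IP listener and one Unix domain listener with the same Selector and accepts a connection on each. It is only an illustration under the assumption of Java 16 or later on a platform with Unix domain socket support; the class name MixedSelector and the method acceptOneOfEach are made up for this example.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.StandardProtocolFamily;
import java.net.UnixDomainSocketAddress;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.file.Files;

class MixedSelector {

    // Accepts one connection on each listener through a single Selector and
    // returns the number of connections accepted.
    static int acceptOneOfEach() throws IOException {
        try (var selector = Selector.open();
             var tcp = ServerSocketChannel.open();
             var unix = ServerSocketChannel.open(StandardProtocolFamily.UNIX)) {

            tcp.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            unix.bind(null); // automatic binding to a temporary path
            var unixAddr = (UnixDomainSocketAddress) unix.getLocalAddress();

            try {
                // From here on, the code does not care which family it handles.
                for (var listener : new ServerSocketChannel[] {tcp, unix}) {
                    listener.configureBlocking(false);
                    listener.register(selector, SelectionKey.OP_ACCEPT);
                }

                int accepted = 0;
                try (var tcpClient = SocketChannel.open(tcp.getLocalAddress());
                     var unixClient = SocketChannel.open(unixAddr)) {
                    while (accepted < 2) {
                        selector.select();
                        for (SelectionKey key : selector.selectedKeys()) {
                            var ready = (ServerSocketChannel) key.channel();
                            try (SocketChannel peer = ready.accept()) {
                                if (peer != null) {
                                    accepted++;
                                }
                            }
                        }
                        selector.selectedKeys().clear();
                    }
                }
                return accepted;
            } finally {
                // The automatically chosen socket file must still be deleted.
                Files.deleteIfExists(unixAddr.getPath());
            }
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println("accepted connections: " + acceptOneOfEach());
    }
}
```

Only the creation and binding lines mention the protocol family; the selection loop is identical for both listeners.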

How to use Unix domain sockets

Existing APIs (pre Java 16) continue to create TCP/IP sockets. Therefore, the new APIs described below must be used to create and bind Unix domain sockets.

First, to create a Unix domain client or server socket you specify the protocol family as shown below:

    // Create a Unix domain server
    ServerSocketChannel server = ServerSocketChannel.open(StandardProtocolFamily.UNIX);

    // Or create a Unix domain client socket
    SocketChannel client = SocketChannel.open(StandardProtocolFamily.UNIX);

The second difference comes when you bind the socket to an address. To support this, a new type UnixDomainSocketAddress is defined which must be supplied to one of the existing bind methods.

UnixDomainSocketAddress instances are created from either of two factory methods as shown below:

    // Create an address directly from the string name and bind it to a socket
    var socketAddress = UnixDomainSocketAddress.of("/foo/bar.socket");
    socket.bind(socketAddress);

    // Create an address from a Path and bind it to a socket
    var socketAddress1 = UnixDomainSocketAddress.of(Path.of("/foo", "bar.socket"));
    socket1.bind(socketAddress1);

There is one exception to the rule stated above that existing methods (defined prior to Java 16) always create TCP/IP sockets: the convenience open method that takes a SocketAddress and returns a client socket connected to the given address. In this case, the protocol family is inferred from the given address object, as shown below:

    var inetAddr = new InetSocketAddress("host", 80);
    var channel1 = SocketChannel.open(inetAddr);

    var unixAddr = UnixDomainSocketAddress.of("/foo/bar.socket");
    var channel2 = SocketChannel.open(unixAddr);

In the example above, channel1 has a TCP/IP protocol family INET or INET6 whereas channel2 has a UNIX protocol family.

There is one other API difference between the two kinds of socket. The set of supported options is different and, as you might expect, TCP/IP-specific options are not supported by Unix domain sockets.
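One way to see the difference in supported options is to ask each kind of channel for its supportedOptions() set. This is a small sketch, assuming Java 16 or later; the class name OptionCheck and the method optionsFor are hypothetical.

```java
import java.io.IOException;
import java.net.SocketOption;
import java.net.StandardProtocolFamily;
import java.net.StandardSocketOptions;
import java.nio.channels.SocketChannel;
import java.util.Set;

class OptionCheck {

    // Opens an unconnected channel of the given family and returns the
    // socket options it reports as supported.
    static Set<SocketOption<?>> optionsFor(StandardProtocolFamily family) throws IOException {
        try (var channel = SocketChannel.open(family)) {
            return channel.supportedOptions();
        }
    }

    public static void main(String[] args) throws IOException {
        for (var family : new StandardProtocolFamily[] {
                StandardProtocolFamily.INET, StandardProtocolFamily.UNIX}) {
            var options = optionsFor(family);
            System.out.println(family
                    + " supports TCP_NODELAY: "
                    + options.contains(StandardSocketOptions.TCP_NODELAY)
                    + ", SO_RCVBUF: "
                    + options.contains(StandardSocketOptions.SO_RCVBUF));
        }
    }
}
```

Code that sets options unconditionally (TCP_NODELAY is a typical case) can check supportedOptions() first so that it keeps working with either family.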

And that’s it. These are the API differences between the two kinds of sockets. However, read on to learn about some more specific aspects of Unix domain sockets that you should be aware of.

A deeper look at Unix domain sockets

As the previous section shows, the two addressing models have much in common, but there are also significant differences that you should be aware of.

- Socket files exist independent of their sockets

TCP/IP port numbers are closely tied to the sockets that own them. In particular, when a TCP/IP socket is closed, its port number can be assumed to be released for re-use (eventually). That is not the case for Unix domain sockets.

When a bound Unix domain socket is closed, its filesystem node remains until the file is explicitly deleted. No subsequent socket can bind to the same name while the socket file (or any other kind of file) exists with the same name. For this reason, it is good practice when cleaning up after a server shuts down to ensure that its socket file gets deleted.
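The behaviour described above can be observed with a short experiment: bind, close, try to bind again, then delete the file and bind once more. This is a sketch rather than a recommended pattern; the class name StaleSocketFile and its method are invented for the example, and the exact exception type thrown by the failed bind is not relied upon beyond it being an IOException.

```java
import java.io.IOException;
import java.net.StandardProtocolFamily;
import java.net.UnixDomainSocketAddress;
import java.nio.channels.ServerSocketChannel;
import java.nio.file.Files;
import java.nio.file.Path;

class StaleSocketFile {

    // Returns true if the socket file outlives its channel and blocks a
    // second bind until it is deleted.
    static boolean rebindFailsUntilDeleted(Path socketPath) throws IOException {
        var address = UnixDomainSocketAddress.of(socketPath);

        try (var first = ServerSocketChannel.open(StandardProtocolFamily.UNIX)) {
            first.bind(address);
        }
        // The channel is closed, but its filesystem node is still there.
        boolean fileSurvived = Files.exists(socketPath);

        boolean secondBindFailed = false;
        try (var second = ServerSocketChannel.open(StandardProtocolFamily.UNIX)) {
            second.bind(address);
        } catch (IOException e) {
            secondBindFailed = true; // the name is still taken by the stale file
        }

        // After deleting the stale file, the same name can be bound again.
        Files.deleteIfExists(socketPath);
        try (var third = ServerSocketChannel.open(StandardProtocolFamily.UNIX)) {
            third.bind(address);
        } finally {
            Files.deleteIfExists(socketPath);
        }
        return fileSurvived && secondBindFailed;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("uds");
        try {
            System.out.println(rebindFailsUntilDeleted(dir.resolve("demo.sock")));
        } finally {
            Files.deleteIfExists(dir);
        }
    }
}
```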

- Client sockets do not need to be bound to a specific name

With TCP/IP, if a client socket is not explicitly bound, the OS implicitly chooses a local port number. Unix domain sockets differ slightly: an unbound client socket is said to be unnamed rather than having a name chosen for it. Unnamed sockets have no corresponding filesystem node, so there is no file to delete after the socket is closed.

Client sockets can still be explicitly bound to a name, but in that case the requirement to delete the socket file after the socket is closed remains.
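A small sketch of the unnamed case: a client connects without binding and then reports its local address, which is expected to be a UnixDomainSocketAddress with an empty path. The class name UnnamedClient and its method are hypothetical, and the throwaway server used here relies on automatic binding purely for convenience.

```java
import java.io.IOException;
import java.net.StandardProtocolFamily;
import java.net.UnixDomainSocketAddress;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.file.Files;

class UnnamedClient {

    // Connects a client that was never explicitly bound and returns the
    // local address the channel reports afterwards.
    static UnixDomainSocketAddress localAddressOfUnboundClient() throws IOException {
        try (var server = ServerSocketChannel.open(StandardProtocolFamily.UNIX)) {
            server.bind(null); // throwaway server on an automatically chosen path
            var serverAddr = (UnixDomainSocketAddress) server.getLocalAddress();
            try (var client = SocketChannel.open(serverAddr)) {
                return (UnixDomainSocketAddress) client.getLocalAddress();
            } finally {
                // Only the server's socket file needs cleaning up.
                Files.deleteIfExists(serverAddr.getPath());
            }
        }
    }

    public static void main(String[] args) throws IOException {
        var local = localAddressOfUnboundClient();
        System.out.println("client path is empty: " + local.getPath().toString().isEmpty());
    }
}
```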

- Server sockets are always bound to a name

Clearly, servers must always be bound in order to be accessible to clients. However, the name does not always need to be “well known”. So, if you call bind(null) with a TCP/IP socket the system automatically chooses a port number for you and you can use some out of band mechanism to pass that information to clients. A similar mechanism exists with Unix domain ServerSocketChannels. If you call bind(null) then the system automatically picks a unique pathname to bind to in some system temporary location. See the API doc for more information about this and how the location can be changed. Note also, for both kinds of ServerSocketChannel, the getLocalAddress method returns the actual bound address.
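The automatic binding described above might be used along these lines. The sketch assumes Java 16 or later; the class name AutoBind and the method autoBoundPath are illustrative, and how the resulting path reaches clients (the out of band mechanism) is left open.

```java
import java.io.IOException;
import java.net.StandardProtocolFamily;
import java.net.UnixDomainSocketAddress;
import java.nio.channels.ServerSocketChannel;
import java.nio.file.Files;
import java.nio.file.Path;

class AutoBind {

    // Lets the system pick the socket path and returns the path it chose.
    static Path autoBoundPath() throws IOException {
        try (var server = ServerSocketChannel.open(StandardProtocolFamily.UNIX)) {
            server.bind(null);
            // getLocalAddress reports the address that was actually bound.
            var address = (UnixDomainSocketAddress) server.getLocalAddress();
            Path path = address.getPath();
            try {
                // A real server would now pass 'path' to its clients and
                // start accepting connections.
                return path;
            } finally {
                // The automatically created socket file is not removed for us.
                Files.deleteIfExists(path);
            }
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println("system chose: " + autoBoundPath());
    }
}
```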

- Unix domain socket addresses are limited in length

All operating systems impose a strict limit on the length of a Unix domain socket address. The value varies by platform and is typically around 100 bytes (for example, 108 on Linux and 104 on macOS). This can pose a problem if particularly deep directory pathnames are in use.

The problem can be avoided in a number of ways such as by using relative pathnames. The actual files can be located in an arbitrarily deep directory and so long as the same working directory is used by the server and its clients, the relative pathname can be arranged to be less than 100 bytes in length.

The problem can also occur with automatic binding of servers (bind(null)) if the system temporary directory has a particularly long name. As mentioned above, there are platform specific mechanisms for overriding the choice of this directory.
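To see the length limit in practice, the sketch below tries to bind to a short name and to a deliberately over-long one. The class name PathLength, the method canBind and the file names are hypothetical, and the example assumes that the system temporary directory itself has a short path; the failure is caught as a plain IOException rather than relying on a specific subclass.

```java
import java.io.IOException;
import java.net.StandardProtocolFamily;
import java.net.UnixDomainSocketAddress;
import java.nio.channels.ServerSocketChannel;
import java.nio.file.Files;
import java.nio.file.Path;

class PathLength {

    // Returns true if a server can be bound to socketPath, false if the
    // bind is rejected (for example because the path is too long).
    static boolean canBind(Path socketPath) throws IOException {
        try (var server = ServerSocketChannel.open(StandardProtocolFamily.UNIX)) {
            server.bind(UnixDomainSocketAddress.of(socketPath));
            return true;
        } catch (IOException e) {
            return false;
        } finally {
            Files.deleteIfExists(socketPath);
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("uds");
        try {
            Path shortName = dir.resolve("s.sock");
            Path longName = dir.resolve("x".repeat(200)); // well over the limit
            System.out.println("short name binds: " + canBind(shortName));
            System.out.println("long name binds:  " + canBind(longName));
        } finally {
            Files.deleteIfExists(dir);
        }
    }
}
```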

Obtaining remote user credentials

JDK 16 also adds a platform-specific socket option, SO_PEERCRED, defined in jdk.net.ExtendedSocketOptions. It works on Unix systems and returns a UnixDomainPrincipal, which encapsulates the user and group of the connected peer.
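A minimal sketch of reading the peer credentials on the server side of a connection follows. It assumes a Unix platform where SO_PEERCRED is supported (elsewhere getOption is expected to throw UnsupportedOperationException); the class name PeerCredentials and the method peerOfLocalClient are made up for the example, and client and server run in the same process only to keep it self-contained.

```java
import java.io.IOException;
import java.net.StandardProtocolFamily;
import java.net.UnixDomainSocketAddress;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.file.Files;
import jdk.net.ExtendedSocketOptions;
import jdk.net.UnixDomainPrincipal;

class PeerCredentials {

    // Connects a client to a local server and returns the credentials the
    // server sees for that client.
    static UnixDomainPrincipal peerOfLocalClient() throws IOException {
        try (var server = ServerSocketChannel.open(StandardProtocolFamily.UNIX)) {
            server.bind(null);
            var address = (UnixDomainSocketAddress) server.getLocalAddress();
            try (var client = SocketChannel.open(address);
                 var accepted = server.accept()) {
                // Query the option on the accepted (server-side) channel.
                return accepted.getOption(ExtendedSocketOptions.SO_PEERCRED);
            } finally {
                Files.deleteIfExists(address.getPath());
            }
        }
    }

    public static void main(String[] args) throws IOException {
        UnixDomainPrincipal peer = peerOfLocalClient();
        System.out.println("user:  " + peer.user().getName());
        System.out.println("group: " + peer.group().getName());
    }
}
```

A server could compare peer.user() against an allow-list before serving a request, as a complement to filesystem permissions on the socket file.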

Using Unix domain sockets to communicate between two Docker containers

In this example, the simple Client and Server below are installed in two different Alpine Linux Docker containers that already have JDK 16 installed. A shared volume (called myvol) is created on the host and mounted in both containers (at /mnt). The server, running in one container, creates a ServerSocketChannel bound to /mnt/server, and the client, running in the second container, connects to the same address.

    import java.net.*;
    import java.nio.*;
    import java.nio.channels.*;
    import java.nio.file.*;
    import java.io.*;
    import static java.net.StandardProtocolFamily.*;

    public class Server {
        public static void main(String[] args) throws Exception {
            var address = UnixDomainSocketAddress.of("/mnt/server");
            try (var serverChannel = ServerSocketChannel.open(UNIX)) {
                serverChannel.bind(address);
                try (var clientChannel = serverChannel.accept()) {
                    ByteBuffer buf = ByteBuffer.allocate(64);
                    clientChannel.read(buf);
                    buf.flip();
                    System.out.printf("Read %d bytes\n", buf.remaining());
                }
            } finally {
                Files.deleteIfExists(address.getPath());
            }
        }
    }

    import java.net.*;
    import java.nio.*;
    import java.nio.channels.*;

    public class Client {
        public static void main(String[] args) throws Exception {
            var address = UnixDomainSocketAddress.of("/mnt/server");
            // The protocol family is inferred from the address type
            try (var clientChannel = SocketChannel.open(address)) {
                ByteBuffer buf = ByteBuffer.wrap("Hello world".getBytes());
                clientChannel.write(buf);
            }
        }
    }

And finally the Docker commands to make it all work. This assumes an existing image with JDK 16 already installed and Client and Server are already compiled with javac on the host.

    # Create a shareable volume called myvol
    docker volume create myvol

    # In one window, run an image mounting the shared volume
    # (assume alpine_jdk_16 is a local image with JDK 16 installed)
    docker run --mount 'type=volume,destination=/mnt,src=myvol' -it alpine_jdk_16 sh

    # In a 2nd window, run the same command
    docker run --mount 'type=volume,destination=/mnt,src=myvol' -it alpine_jdk_16 sh

    # In a 3rd window, get the container ids (substitute the ids below as appropriate)
    docker ps
    docker cp Client.class 3c7e76e9f1a2:/root
    docker cp Server.class 687de1ed186c:/root

    # Go back and run Server in 687de1ed186c and Client in 3c7e76e9f1a2

Inherited channels

The inherited channel mechanism has existed since Java 1.5 and has always been able to return a TCP/IP socket when the Java virtual machine has been launched from some mechanism like inetd on Linux or launchd on macOS. This mechanism now supports Unix domain sockets, assuming the underlying launching framework also supports them.
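As a sketch of how a launched service might inspect what it was handed, the code below calls System.inheritedChannel() and checks whether the result is a listener bound to a Unix domain address. The class name InheritedChannelCheck and the describe method are hypothetical; when the JVM is started from an ordinary shell there is normally no inherited channel and the call returns null.

```java
import java.io.IOException;
import java.net.UnixDomainSocketAddress;
import java.nio.channels.Channel;
import java.nio.channels.ServerSocketChannel;

class InheritedChannelCheck {

    // Gives a short description of the channel inherited from the launcher.
    static String describe(Channel inherited) throws IOException {
        if (inherited == null) {
            return "no inherited channel";
        }
        if (inherited instanceof ServerSocketChannel server) {
            // The bound address reveals which kind of socket was passed in.
            return server.getLocalAddress() instanceof UnixDomainSocketAddress
                    ? "Unix domain listener"
                    : "TCP/IP listener";
        }
        return "other channel: " + inherited.getClass().getSimpleName();
    }

    public static void main(String[] args) throws IOException {
        System.out.println(describe(System.inheritedChannel()));
    }
}
```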

Limitations

JEP-380 concentrated on features that are common across the main supported platforms. The following Unix/Linux specific features are not currently supported, but could be considered in future work:

- Linux abstract paths. Abstract paths are not linked to filesystem objects and therefore behave more similarly to TCP/IP port numbers in terms of not existing independent of the owning socket.

- Datagram support

- Support for java.net.Socket and java.net.ServerSocket. The legacy socket networking classes are tied to TCP/IP mainly due to their fixed use of java.net.InetAddress for addressing.

- Sending channels through Unix domain SocketChannels. Because Unix domain sockets are confined to a single system it is possible to use them to transmit objects other than data between connected peers. In principle, any object represented by a file descriptor can be sent between processes in this way. In practice, it would be most likely to limit the capability to NIO channel objects.

Conclusion

This was a brief introduction to Unix domain sockets in SocketChannel and ServerSocketChannel in Java 16. As always, consult the NIO channels API doc for the complete specifications. Platform specific socket options are defined in jdk.net.